AI is everywhere nowadays, just not always where you can see it.

Executives want AI to accelerate the business. Employees want to use tools that help them work smarter. IT and security teams want to keep the environment secure and compliant.

All three are valid goals, yet they often clash in ways that create blind spots.

One way or another, AI tools enter your organization quietly through personal accounts, browser extensions, and team-level experimentation.

This growing layer of shadow AI isn’t inherently harmful, but it is unknown. And unknown activity is difficult to govern.

AI’s Promise and Its Blind Spots

AI is reshaping how work gets done. Employees use it to write faster, analyze information, build presentations, generate ideas, and automate tasks that used to take hours. In many cases, these tools are boosting productivity and helping teams move at a pace that simply wasn’t possible a few years ago.

The same speed that makes AI so valuable also creates its biggest challenge: adoption outpacing oversight. Eight out of 10 employees are using unapproved AI tools. They do it not out of defiance, but because sanctioned tools aren't always available, easy to use, or fast enough to keep pace with what their work demands.

But the rush to experiment has outpaced the guardrails needed to use AI responsibly. Most organizations still lack clear policies, consistent governance, or visibility into which GenAI tools employees are using.

This creates an expanding set of blind spots:

- Unmonitored data flowing into third-party AI tools

- Employees unknowingly exposing proprietary content

- AI-generated outputs introducing bias or accuracy issues

- Regulatory and compliance obligations that remain unmet

The potential of AI is immense. But so is the responsibility that comes with it, especially for IT and security teams.

AI Tug-of-War: Innovation vs. Risk

Shadow AI doesn’t happen because employees want to bypass rules. It happens because they are trying to keep up. With hundreds of AI tools launching every month, employees naturally gravitate toward the quickest, most intuitive option, even when it’s not officially sanctioned.

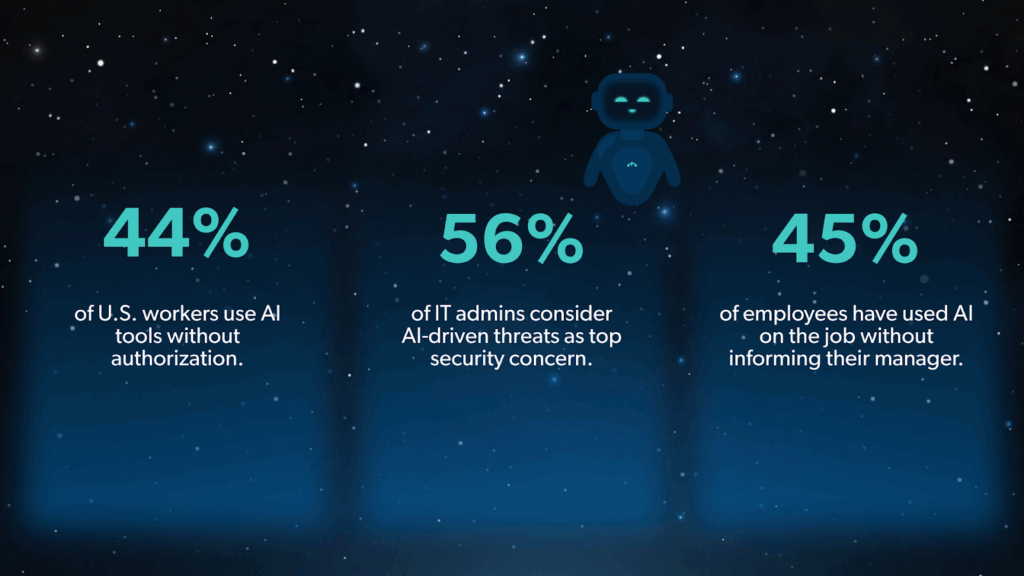

The data reflects this tension:

- 44% of U.S. workers use AI tools without authorization.

- 56% of IT admins consider AI-driven threats their top security concern.

- 45% of employees have used AI on the job without informing their manager.

This creates an organizational tug-of-war: employees want AI that helps them move faster, while IT and security teams must manage risk, compliance, and data protection.

The imbalance is accelerating as the gap between innovation and security expands. Most companies haven’t yet defined what “responsible AI adoption” looks like, how data should flow through AI systems, or where experimentation should happen safely. Governance frameworks, risk controls, and employee education simply haven’t kept pace with the speed of AI adoption.

As a result, organizations are pulled in two directions: the desire to innovate, and the need to protect.

Shadow AI lives in the gap between those two forces.

From Shadow IT to Shadow AI

Shadow AI didn’t appear out of nowhere. It’s the next step in a pattern we have seen before.

When organizations adopted cloud services, employees often reached for their own tools to solve problems quickly. That behavior became known as shadow IT. The same thing is happening now with AI, only the stakes may be even higher.

AI tools don’t simply hold data. They interpret it, reshape it, generate new content, and sometimes make decisions without much oversight. That means the risks extend beyond unauthorized software to include how information is processed, combined, and shared.

A few examples illustrate the shift from shadow IT to shadow AI:

- Employees may use public AI tools to draft documents or analyze information, without knowing where the data goes.

- Personal accounts can become a gateway for business activity that IT never sees.

- Generative tools can produce outputs that look correct but contain errors or confidential details.

- Teams might adopt AI assistants long before the organization evaluates them.

In short, the behavior is familiar, but the impact is different. The instinct to move fast remains the same, yet generative AI tools interpret, reshape, and can expose proprietary data, so the shift from shadow IT to shadow AI represents a fundamental change in the organizational risk landscape.


Building AI Governance That Actually Works

Most organizations agree they need some form of AI governance. The challenge is turning that idea into something people can follow in real day-to-day work. Policies alone don’t solve the problem, and step-by-step rules rarely keep pace with how quickly new tools appear.

Effective governance starts with three things: clarity, consistency, and context.

Clarity: People Should Know What's Allowed and Why

Employees adopt AI tools because they make work easier. If approved alternatives feel slower or unclear, employees will find their own solutions. Governance only works when everyone understands the reasoning behind it. That means:

- Explaining how data is expected to be handled

- Defining where AI can support work and where it shouldn’t

- Setting expectations for accuracy, review, and human oversight

Organizations that don’t explain why guardrails are in place tend to see more workarounds, not fewer.

Consistency: Policies Need to Match How You Operate

This is where many governance efforts fall apart. A policy written in legal terms won't help someone who needs quick guidance while drafting content or reviewing customer information.

To be useful, governance should:

- Apply the same principles across teams

- Use language employees recognize

- Include a clear process for approvals and exceptions

- Connect to everyday tools and workflows

If AI governance doesn’t fit into how people actually work, it gets ignored, and shadow AI grows.

Context: One Size Doesn't Fit All

Not all companies should adopt AI at the same pace or with the same level of control. The right approach depends on maturity across security, data management, regulatory exposure, and culture.

Examples:

- High-maturity teams implement structured experimentation: employees can innovate, but only within clear, secure, and monitored boundaries.

- Lower-maturity teams may lack formal guardrails, which means experimentation can happen more freely but carries higher risk.

The goal is to create an environment where responsible experimentation can happen without exposing your organization to unnecessary risk.

Bring Light to “AI in the Shadows” by Empowering Employees

All of these governance efforts rely on visibility. Without knowing which AI tools employees are actually using, policies and guardrails remain theoretical.

JumpCloud’s shadow AI discovery identifies the GenAI applications employees adopt across your organization, tracks usage patterns, and surfaces departmental activity before problems arise. IT teams can take pre-discovery actions, approving or restricting tools early, ensuring governance is proactive rather than reactive.

With this level of insight, you can enforce policies effectively, generate compliance reports, and help employees use AI safely. Once visibility is in place, you can focus on other key actions:

- Build confidence and transparency: Clearly communicate AI's role and how workflows and responsibilities will evolve. Let employees know that AI is there to accelerate their work, not replace them.

- Enable safe experimentation: Approve or restrict AI tools before full discovery, creating a secure sandbox for testing and learning. Have leadership communicate the company's AI vision, and reward employees who experiment with AI and find new ways to use it.

- Justify AI investments: Monitor usage patterns and adoption trends to identify and sanction the tools employees will actually use.

- Simplify access to approved tools: Centralize AI resources and make approved AI solutions easy to find and integrate.

Ready to uncover shadow AI tools in your organization? Discover JumpCloud AI & SaaS Management today.