Effective SaaS management starts with a deceptively simple question: What exactly counts as SaaS?

The answer to this question is critical because it dictates the entire security and governance workflow for IT leaders. The moment a site is classified as an application, it triggers a chain of actions: applying security policies, tracking licenses, and enforcing user governance.

If the initial classification is wrong, the entire downstream management process is flawed.

This is why the integrity of our application catalog is paramount. Our engineering objective is not just inclusion but also exclusion: we actively prevent non-SaaS sites (like social media, banking platforms, or news outlets) from entering the catalog because we don’t track or manage private consumer data on those platforms. Let’s look at how this works in detail.

The Core Challenge: Maintaining Catalog Integrity at Scale

Manually vetting thousands of domains is slow and doesn’t scale, introducing significant lag and inconsistency into our discovery pipeline. That lag and inconsistency hinder our ability to deliver the comprehensive, up-to-date catalog our customers expect.

To solve this critical challenge, we built an AI-powered validation engine. Its job is to continuously expand our catalog while, most importantly, strictly policing its boundaries using consistent criteria.

To deliver a comprehensive and accurate catalog, we had to overcome three non-negotiable engineering challenges:

1. Scale and Speed

Manually reviewing thousands of potential domains took a dedicated engineer weeks and was prone to human error. We needed a system that could analyze domains in parallel, deliver reliable classification in minutes, and ingest vast amounts of data without slowing down development cycles.
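Analyzing domains in parallel without overwhelming the browser or the model API generally means capping how many run at once. The sketch below shows one common way to do that in TypeScript, a small worker pool. The helper name mapWithConcurrency and the fixed concurrency limit are illustrative assumptions, not details of the production pipeline.

```typescript
// Run an async task over many items with at most `limit` tasks in flight.
// Results are returned in the same order as the input items.
async function mapWithConcurrency<T, R>(
  items: readonly T[],
  limit: number,
  task: (item: T, index: number) => Promise<R>,
): Promise<R[]> {
  if (!Number.isInteger(limit) || limit < 1) {
    throw new RangeError("limit must be a positive integer");
  }
  const results = new Array<R>(items.length);
  let next = 0; // index of the next item to claim

  // Each worker repeatedly claims the next unprocessed index until none remain.
  // JavaScript runs these callbacks on one thread, so `next++` is never raced.
  async function worker(): Promise<void> {
    while (next < items.length) {
      const i = next++;
      results[i] = await task(items[i], i);
    }
  }

  const workers = Array.from({ length: Math.min(limit, items.length) }, () => worker());
  await Promise.all(workers);
  return results;
}
```

For example, `await mapWithConcurrency(domains, 8, analyzeDomain)` would keep at most eight calls to a hypothetical `analyzeDomain` in flight. Because `Promise.all` rejects on the first failure, each per-domain task should catch its own errors (for instance, by recording the domain as unreachable) so that one broken site does not abort the whole batch.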

2. Consistency Through Definition

The definition of “SaaS” is often subjective. Different team members using different criteria lead to inconsistencies that erode trust. By embedding a clear, rules-based definition directly into our AI’s prompts, we ensure every potential website is judged by the exact same strict criteria.

3. Deeper Insights and Metadata

A simple classification isn’t enough for IT administrators who need to manage security and licensing. We needed the system to automatically extract valuable metadata, such as the application’s name, description, category, and logo, to instantly enrich our catalog and power downstream analytics.
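One way to make extracted metadata safe to load into a catalog is to ask the model for JSON and validate it before use. In this minimal sketch, the AppMetadata fields mirror the metadata named above (name, description, category, logo). The exact field names, the isSaaS flag, and the parseAppMetadata helper are hypothetical.

```typescript
// Hypothetical shape of the metadata the model is asked to return as JSON.
interface AppMetadata {
  isSaaS: boolean;
  name: string;
  description: string;
  category: string;
  logoUrl: string | null; // null when the model returned no usable logo URL
}

// Parse raw model output and throw if it does not match AppMetadata.
function parseAppMetadata(raw: string): AppMetadata {
  // Models sometimes wrap JSON in a markdown code fence; strip it if present.
  const text = raw.trim().replace(/^`{3}(?:json)?\s*/i, "").replace(/\s*`{3}$/, "");
  const data: unknown = JSON.parse(text); // throws SyntaxError on invalid JSON
  if (typeof data !== "object" || data === null || Array.isArray(data)) {
    throw new Error("model output is not a JSON object");
  }
  const o = data as Record<string, unknown>;

  // Required non-empty string fields.
  const str = (key: string): string => {
    const v = o[key];
    if (typeof v !== "string" || v.trim() === "") {
      throw new Error(`missing or empty field: ${key}`);
    }
    return v.trim();
  };

  const isSaaS = o["isSaaS"];
  if (typeof isSaaS !== "boolean") throw new Error("missing or invalid field: isSaaS");

  // The logo is optional: accept a string URL, null, or absence.
  const logo = o["logoUrl"];
  if (logo !== undefined && logo !== null && typeof logo !== "string") {
    throw new Error("invalid field: logoUrl");
  }

  return {
    isSaaS,
    name: str("name"),
    description: str("description"),
    category: str("category"),
    logoUrl: typeof logo === "string" && logo.trim() !== "" ? logo.trim() : null,
  };
}
```

The sketch rejects malformed output instead of patching it. This keeps a bad response from silently writing empty or mistyped fields into the catalog.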

These constraints demanded a fundamental shift from manual review to a machine-driven validation pipeline.

The Transformation: 50x Gains in Efficiency

Shifting our catalog validation to an AI-first approach has been transformative.

Manually reviewing and classifying just 500 domains once took an engineer over a week. The new AI engine processes over 700 domains in under an hour on a standard development machine. This leap in efficiency has already enabled us to analyze over 25,000 unique domains.
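The efficiency claim can be sanity-checked with rough throughput arithmetic. The calculation below assumes a 40-hour working week for the manual baseline, which the figures above do not specify:

```latex
\begin{aligned}
r_{\text{manual}} &\le \frac{500\ \text{domains}}{40\ \text{h}} = 12.5\ \text{domains/h} \\
r_{\text{AI}} &\ge \frac{700\ \text{domains}}{1\ \text{h}} = 700\ \text{domains/h} \\
\frac{r_{\text{AI}}}{r_{\text{manual}}} &\ge \frac{700}{12.5} = 56
\end{aligned}
```

Under that assumption, the roughly 50x headline figure is, if anything, conservative.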

Key Performance Metrics:

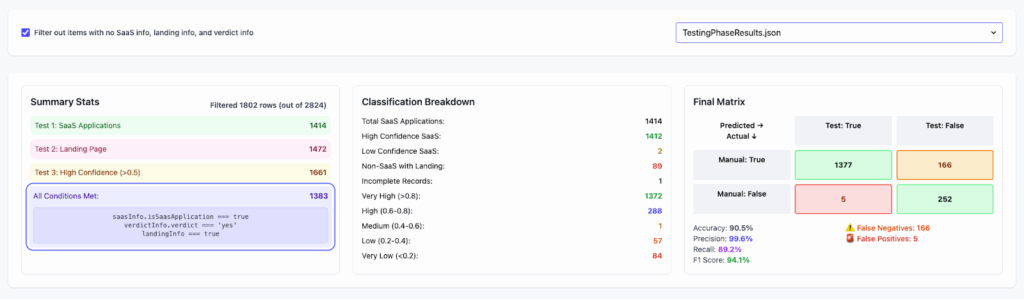

- Overall Accuracy: 90.5%

- Precision: 99.6% (Of the domains the engine labels as SaaS, 99.6% really are SaaS, so we very rarely misidentify a non-SaaS website as a SaaS product.)

- Recall: 89.2% (The engine correctly identifies 89.2% of genuine SaaS applications, the vast majority.)

- Cost Efficiency: The average cost to analyze one domain is just $0.0057 using Gemini 2.0 Flash.
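For reference, these are the standard definitions behind the three quality metrics. They are expressed in terms of the true positives (TP), false positives (FP), false negatives (FN), and true negatives (TN) counted against the verified dataset:

```latex
\begin{aligned}
\text{Accuracy} &= \frac{TP + TN}{TP + FP + FN + TN} \\
\text{Precision} &= \frac{TP}{TP + FP} \\
\text{Recall} &= \frac{TP}{TP + FN}
\end{aligned}
```

A precision of 99.6% therefore means that roughly 4 in every 1,000 domains labeled SaaS are false positives. A recall of 89.2% means that about 11 in every 100 genuine SaaS domains are missed.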

Key Results:

We have tripled the number of applications that are included in our SaaS app catalog!

- Total domains analyzed: 25K

- Number of SaaS apps identified: 11K

- Added to our catalog: 2.5K

At the manual pace, analyzing the 25K domains alone would have taken a single engineer around 11.5 months. With the power of AI, it took only 1.5 days.
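The time comparison follows from the throughput figures above. The calculation assumes two things about how the estimate was made: a manual pace of 500 domains per week, and the AI rate of 700 domains per hour sustained around the clock. The last line is the total model cost implied by the stated per-domain average.

```latex
\begin{aligned}
t_{\text{manual}} &= \frac{25{,}000}{500\ \text{domains/week}} = 50\ \text{weeks} \approx 11.5\ \text{months} \\
t_{\text{AI}} &= \frac{25{,}000}{700\ \text{domains/h}} \approx 35.7\ \text{h} \approx 1.5\ \text{days} \\
\text{cost}_{\text{AI}} &\approx 25{,}000 \times \$0.0057 \approx \$142.50
\end{aligned}
```

Here 50 weeks is converted at about 4.35 weeks per month.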

These results show that our AI-driven approach not only delivers scale but also maintains a high standard of quality, fundamentally changing how we build and expand our product catalog.

The visualization above breaks down SaaS detection results into true positives (TP), false positives (FP), false negatives (FN), and true negatives (TN).

How We Did It

Key Technologies Used:

- Browser Automation: Playwright

- AI Integration: A custom library built on Google’s Gemini 2.0 Flash

- Backend & Reporting: Node.js, Express

- Programming Language: TypeScript

At its core, the system uses Playwright for browser automation and Google’s Gemini 2.0 Flash as its analytical brain. Here’s the workflow breakdown:

The pipeline starts by ingesting large CSV lists of domains, cleaning the data, and preparing thousands of domains for parallel processing.
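A first cleaning pass over a raw domain list typically normalizes case, strips schemes, paths, and ports, removes a leading "www.", and deduplicates. The sketch below makes several assumptions. The domain sits in the first column, and fields contain no quoted commas (a real CSV file would warrant a proper CSV parser). normalizeDomains is a hypothetical helper, not the production cleaner.

```typescript
// Normalize one raw entry (a bare domain or a URL) to a lowercase hostname.
// Returns null for entries that do not look like a domain.
function normalizeDomain(raw: string): string | null {
  let s = raw.trim().toLowerCase();
  if (s === "") return null;
  // Take the first CSV column (assumes no quoted commas) and drop surrounding quotes.
  s = s.split(",")[0].trim().replace(/^"|"$/g, "");
  // Strip a scheme such as http:// or https://.
  s = s.replace(/^[a-z][a-z0-9+.-]*:\/\//, "");
  // Drop everything after the host: path, query, fragment, or port.
  s = s.split(/[/?#:]/)[0];
  // Remove a leading "www." and any trailing dot.
  s = s.replace(/^www\./, "").replace(/\.$/, "");
  // Require at least one dot and only hostname-safe characters.
  return /^[a-z0-9-]+(\.[a-z0-9-]+)+$/.test(s) ? s : null;
}

// Clean a raw CSV/text dump: normalize every line, drop invalid entries and duplicates.
function normalizeDomains(csvText: string): string[] {
  const seen = new Set<string>(); // a Set keeps first-seen order and removes duplicates
  for (const line of csvText.split(/\r?\n/)) {
    const domain = normalizeDomain(line);
    if (domain !== null) seen.add(domain);
  }
  return Array.from(seen);
}
```

A header row such as "domain" contains no dot, so the validity check drops it along with other malformed entries.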

Our script uses Playwright to navigate to each website. To handle dynamic, JavaScript-heavy sites, we rely on Playwright’s load-state waits (waitForLoadState('networkidle')), which hold off until network activity has settled. This helps ensure that the necessary content is fully rendered before we begin analysis.
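The navigation step might look like the sketch below. To stay self-contained, it depends only on a small PageLike interface that a Playwright Page satisfies structurally (goto, waitForLoadState, title, and innerText are real Page methods). The timeouts, the character cap, and the fallback when the network never goes idle are illustrative assumptions, not the production settings.

```typescript
// The subset of Playwright's Page API this sketch uses.
interface PageLike {
  goto(
    url: string,
    options?: { timeout?: number; waitUntil?: "load" | "domcontentloaded" | "networkidle" | "commit" },
  ): Promise<unknown>;
  waitForLoadState(state: "networkidle", options?: { timeout?: number }): Promise<void>;
  title(): Promise<string>;
  innerText(selector: string): Promise<string>;
}

interface PageContent {
  url: string;
  title: string;
  text: string;
}

// Navigate to a domain, give client-side rendering a chance to finish,
// and return the page's visible text for the classifier.
async function extractPageContent(
  page: PageLike,
  domain: string,
  maxChars = 20_000, // hypothetical cap to bound prompt size and cost
): Promise<PageContent> {
  const url = `https://${domain}`;
  await page.goto(url, { waitUntil: "domcontentloaded", timeout: 30_000 });
  try {
    // Resolves once there have been no network connections for about 500 ms.
    await page.waitForLoadState("networkidle", { timeout: 15_000 });
  } catch {
    // Some sites poll or stream indefinitely; fall back to whatever has rendered so far.
  }
  const title = await page.title();
  const text = (await page.innerText("body")).replace(/\s+/g, " ").trim().slice(0, maxChars);
  return { url, title, text };
}
```

With Playwright installed, you would pass the Page returned by browser.newPage(). In tests, a plain object with the same four methods can stand in for it.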

The extracted content is fed into the Gemini 2.0 Flash model, where we enforce our strict classification rules. The AI performs a multi-prompt classification for each domain, simultaneously evaluating:

- Prompt 1 (Classification): Is this SaaS or not?

- Prompt 2 (Evidence): Does this look like a product landing page (e.g., pricing, sign-up flow)?

- Prompt 3 (Confidence): What is the model’s certainty level?
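Because the three prompts are independent, they can be sent concurrently with Promise.all. In this sketch, ModelCall is a stand-in for the custom Gemini-based library, whose interface is not described here. The prompt wording, the expected answer formats, and the rule for combining the three answers (including the 0.8 confidence threshold) are all hypothetical.

```typescript
// Stand-in for the custom Gemini client: send a prompt, get the model's text reply.
type ModelCall = (prompt: string) => Promise<string>;

interface Verdict {
  isSaaS: boolean;
  looksLikeProductPage: boolean;
  confidence: number; // clamped to 0..1
  accepted: boolean; // final decision after combining the three answers
}

// Treat replies that start with "yes" or "true" (any case) as affirmative.
const isAffirmative = (reply: string): boolean => /^\s*(yes|true)\b/i.test(reply);

async function classifyDomain(
  pageText: string,
  callModel: ModelCall,
  minConfidence = 0.8,
): Promise<Verdict> {
  // Issue all three prompts at once rather than one after another.
  const [classification, evidence, certainty] = await Promise.all([
    callModel(`Is the following website a SaaS product? Answer yes or no.\n\n${pageText}`),
    callModel(`Does this page look like a product landing page (pricing, sign-up flow)? Answer yes or no.\n\n${pageText}`),
    callModel(`How certain are you that this website is a SaaS product? Reply with a number from 0 to 1.\n\n${pageText}`),
  ]);
  const parsed = Number.parseFloat(certainty);
  const confidence = Number.isFinite(parsed) ? Math.min(1, Math.max(0, parsed)) : 0;
  const isSaaS = isAffirmative(classification);
  const looksLikeProductPage = isAffirmative(evidence);
  // Conservative combination: accept only when all three signals agree.
  const accepted = isSaaS && looksLikeProductPage && confidence >= minConfidence;
  return { isSaaS, looksLikeProductPage, confidence, accepted };
}
```

Requiring all three signals to agree trades some recall for precision. That fits the catalog's emphasis on keeping non-SaaS sites out.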

The core of our catalog integrity depends on Strict Exclusion Criteria embedded in the prompt. We instruct the AI to act as an “expert software analyst” and explicitly list what is not SaaS. For instance, the AI must mark a site as false if it is exclusively a social media platform, a news site, an e-commerce store, or a general blog.
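Embedding the exclusion criteria in the prompt can be as simple as generating the instructions from one shared list, so every run sees identical rules. In this minimal sketch, the categories come from the examples in this article. The buildSystemPrompt helper and the exact wording are hypothetical.

```typescript
// Single source of truth for what is NOT SaaS, taken from the examples in the text.
const EXCLUDED_CATEGORIES = [
  "a social media platform",
  "a news site",
  "an e-commerce store",
  "a general blog",
  "a banking platform",
] as const;

// Build the instruction text shared by every classification request.
function buildSystemPrompt(excluded: readonly string[] = EXCLUDED_CATEGORIES): string {
  const rules = excluded.map((c, i) => `${i + 1}. It is exclusively ${c}.`).join("\n");
  return [
    "You are an expert software analyst.",
    "Decide whether the website described below is a SaaS product.",
    "Answer false if ANY of the following is true:",
    rules,
    "Otherwise, answer true only if the site offers software delivered over the web.",
  ].join("\n");
}
```

Keeping the list in one constant means that a change to the definition reaches every request at once. This is what keeps the criteria consistent across runs.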

We continuously cross-reference every AI classification against curated datasets that our experts have verified manually. This critical step validates our 99.6% precision rate and provides the feedback loop we need to fine-tune the model.
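The cross-referencing step joins the model’s labels with the verified labels by domain and tallies the confusion matrix. It also keeps the disagreements for review. In this minimal sketch, the Labels map shape and the evaluate helper are hypothetical.

```typescript
type Labels = ReadonlyMap<string, boolean>; // domain -> isSaaS

interface Evaluation {
  tp: number;
  fp: number;
  fn: number;
  tn: number;
  precision: number;
  recall: number;
  accuracy: number;
  disagreements: string[]; // domains where the model and the experts differ
}

// Compare predictions with expert-verified labels; only domains present in both are scored.
function evaluate(predicted: Labels, verified: Labels): Evaluation {
  let tp = 0, fp = 0, fn = 0, tn = 0;
  const disagreements: string[] = [];
  verified.forEach((truth, domain) => {
    const guess = predicted.get(domain);
    if (guess === undefined) return; // not yet classified by the model
    if (guess && truth) tp++;
    else if (guess && !truth) fp++;
    else if (!guess && truth) fn++;
    else tn++;
    if (guess !== truth) disagreements.push(domain);
  });
  // Avoid division by zero on empty or one-sided samples.
  const ratio = (num: number, den: number): number => (den === 0 ? 0 : num / den);
  return {
    tp, fp, fn, tn,
    precision: ratio(tp, tp + fp),
    recall: ratio(tp, tp + fn),
    accuracy: ratio(tp + tn, tp + fp + fn + tn),
    disagreements,
  };
}
```

The disagreements list feeds naturally into expert review. Each entry is either a model error to learn from or a verified label worth double-checking.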

Moving Forward with Confidence

SaaS management depends on clarity: knowing which applications truly qualify as SaaS, and being confident in the data that drives decisions. Building an AI-driven validation engine has shown us that automation and verification can work hand in hand, scaling discovery without sacrificing accuracy.

If your organization is looking for greater clarity and confidence in shadow IT discovery, explore JumpCloud SaaS management and see the validation engine you’ve read about here at work, helping you eliminate blind spots in your SaaS environment.