While the IT sector’s focus on virtualization technologies has significantly increased over the past couple of years, the concept has been with us for much longer. As early as the 1960s, IBM was already using virtualization to develop robust time-sharing applications. In recent times, however, organizations are increasingly using the technology to enhance IT agility while creating significant cost savings.

Increased performance, greater workload mobility, and automated operations are some of the benefits that virtual machines (VMs) provide, making IT simpler to manage and less costly to operate. In this post, we’ll explore what a VM is, how VMs work, their advantages and disadvantages, and other terminology associated with virtualization.

Definition of a Virtual Machine

A VM is a computer file, typically called an image, that behaves like an actual physical personal computer (PC). It has its own virtual CPU, memory, storage, and network interface card (NIC), all carved out of a physical server’s resources, which allow it to run its own operating system (OS) and application environment separately from the underlying hardware.

While the parts (CPU, memory, storage, and NICs) that make up your PC are physical and tangible, VMs are software-defined computers that run within physical hosts and exist only as code. Because VMs are abstracted from the underlying hardware, many of them can exist on a single physical machine. You can also move them easily between host servers as demand changes, which helps you use the hardware’s resources efficiently.

VMs also allow multiple different OSes to run simultaneously on a single hardware system. For example, you can run Windows, Linux, and macOS on the same PC, and each OS runs much as it would on physical hardware. As such, the end-user experience within a VM is nearly identical to what you’d expect on a physical machine.

How Does a Virtual Machine Work?

VMs are created through a process called virtualization, which uses software called a hypervisor (explained below) to separate the physical hardware resources from the virtual environment. Think of a virtual environment as the IT services that need to use the physical hardware’s resources.

You can install the hypervisor on top of an OS or directly onto the hardware. When installed, the hypervisor takes charge of the computing resources and divides them up to be used in multiple VMs.

Because the VMs operate much like physical computers, you can run multiple OSes on a single machine (also called the host). During these operations, the hypervisor allocates hardware resources to each VM on an as-needed basis. The flexible resource allocation and mobility of the VMs simplify IT operations, making them efficient and cost-effective. These are also the reasons that have made virtualization the foundation for cloud computing.
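To make the allocation idea concrete, here is a minimal sketch in Python. It is a toy model, not how any real hypervisor is implemented: the `Host` and `ToyHypervisor` classes, the VM names, and the resource figures are all hypothetical and exist only for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Host:
    """Physical machine whose resources get divided among VMs."""
    cpu_cores: int
    memory_gb: int


@dataclass
class ToyHypervisor:
    """Hypothetical model that tracks each VM's share of the host."""
    host: Host
    vms: dict = field(default_factory=dict)  # name -> (cores, memory_gb)

    def free(self):
        """Return (free_cores, free_memory_gb) still available on the host."""
        used_cores = sum(cores for cores, _ in self.vms.values())
        used_mem = sum(mem for _, mem in self.vms.values())
        return self.host.cpu_cores - used_cores, self.host.memory_gb - used_mem

    def create_vm(self, name, cores, memory_gb):
        """Reserve resources for a new VM, or fail if the host is full."""
        free_cores, free_mem = self.free()
        if cores > free_cores or memory_gb > free_mem:
            raise RuntimeError(f"not enough free resources for {name}")
        self.vms[name] = (cores, memory_gb)

    def destroy_vm(self, name):
        """Remove a VM, returning its share to the pool."""
        del self.vms[name]


hv = ToyHypervisor(Host(cpu_cores=16, memory_gb=64))
hv.create_vm("windows-guest", cores=4, memory_gb=16)
hv.create_vm("linux-guest", cores=8, memory_gb=32)
print(hv.free())  # (4, 16)
```

This sketch uses strict reservations to keep the bookkeeping obvious. Real hypervisors are considerably more flexible and can, for example, overcommit CPU and memory across guests.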

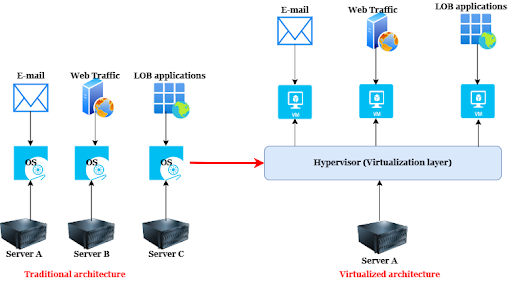

To illustrate further how virtualization enhances efficiency and cost-effectiveness, consider the following scenario:

A company has three servers: server A (supports email), server B (supports web traffic), and server C (supports line-of-business applications). Because the company only uses each server for a dedicated purpose, it can’t optimize its computing capacity. For example, although the company pays 100% of the servers’ maintenance costs, it may only be utilizing a third of their capacity.

With virtualization, the company could split one of the three servers into three VMs and reduce maintenance costs by about two-thirds (roughly 67%), assuming each server costs the same to maintain. For example, it could split server A into three VMs to handle email, web traffic, and line-of-business applications. The company could then retire servers B and C to save on maintenance costs or repurpose them for other IT services.
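The arithmetic behind this scenario can be checked with a few lines of Python. This is only a sketch under simplifying assumptions that the scenario implies but does not state outright: every server costs the same to maintain, and each one runs at exactly one third of its capacity. The function name `maintenance_savings` is made up for this example.

```python
from fractions import Fraction


def maintenance_savings(servers_before, servers_after):
    """Fraction of maintenance cost saved, assuming equal cost per server."""
    return Fraction(servers_before - servers_after, servers_before)


# Consolidating three servers onto one saves two-thirds of the cost.
savings = maintenance_savings(servers_before=3, servers_after=1)
print(f"{float(savings):.1%}")  # 66.7%

# Three workloads, each using a third of a server, fill one server exactly.
utilization_each = Fraction(1, 3)
combined = 3 * utilization_each
print(combined)  # 1
```

A combined utilization of 1 means the remaining server would have no headroom, so in practice you would size the consolidated host with some spare capacity.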

The following diagram shows a side-by-side comparison between traditional and virtualized architecture as described in the scenario above.

What Is a Hypervisor?

A hypervisor is an application that isolates virtual environments from the underlying physical hardware. This isolation allows the underlying hardware resources to operate multiple VMs as guests, enabling them to share the system’s computing resources effectively. There are two principal types of hypervisors:

Type 1 hypervisors

A type 1 hypervisor — also called a bare-metal hypervisor — runs directly on the underlying server. Type 1 hypervisors operate much like a typical OS running VMs, albeit with minimal functionalities. These hypervisors are highly efficient when used in server environments because they have direct access to the host’s resources. Examples of type 1 hypervisors include Microsoft Hyper-V, VMware ESXi, and Kernel-based Virtual Machine (KVM).

Type 2 hypervisors

Type 2 hypervisors, also called hosted hypervisors, don’t run directly atop the underlying server. Instead, they run as applications in an OS. Type 2 hypervisors are suitable for individual users who want to run multiple OSes on their PCs. Examples of such hypervisors include VirtualBox, Parallels Desktop for Mac, and VMware Workstation Player.

Advantages of Virtual Machines

VMs have several benefits. Below are a few of them:

- They provide the foundation for cloud computing. VMs are fundamental building blocks of cloud computing, powering different workloads in an efficient and scalable manner.

- They can help organizations test new OSes and applications. Organizations can test-drive new OSes and applications in VMs without affecting the host’s operating system.

- They are a great way to support software development and IT operations (DevOps). Enterprise developers can easily create and configure VMs to help fast-track software development lifecycle processes. They can create VMs to cater to specific tasks such as static software testing.

- They can allow enterprises to run legacy software in cloud-based environments. Providing access to on-premise legacy applications in cloud-based environments may be time-consuming and complex. Organizations can efficiently deliver such applications with application virtualization, allowing users to access them through web browsers in a cloud-based environment.

- They provide flexibility in disaster recovery. Because virtualized servers are independent of the underlying physical hardware, an organization doesn’t need the same physical servers at its secondary disaster recovery site as it has at its primary site.

- They can enhance security. A virtualized server makes it easier for IT teams to isolate mission-critical applications because the hypervisor places an abstraction layer between the guest OS and the hardware, an isolation approach often called sandboxing.

Disadvantages of Virtual Machines

Now that we’ve examined the advantages of VMs, let’s take a look at some of their disadvantages.

- VMs may perform less efficiently than physical hosts. VMs are less efficient than physical machines because they access the hardware indirectly. For example, running a VM on top of the host’s OS requires the virtual machine to request access to resources through the host’s operating system, potentially impacting the speed of operations.

- An infected host can affect all the VMs. While a properly configured VM should not be able to infect the host, a compromised or poorly secured host server can affect every VM running on top of it. This can happen, for example, when there are bugs in the host’s OS.

Types of Virtual Machines

There are two basic categories of VMs.

Process VMs

A process VM allows users to run a single process as an application on the physical host. An example of such a VM is the Java Virtual Machine (JVM), which lets Java applications run on any platform that has a JVM implementation.
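To give a feel for what a process VM does, the sketch below implements a tiny stack machine in Python. It is not the JVM and does not use the JVM’s instruction set: the opcodes `PUSH`, `ADD`, and `MUL` are invented for this example. The point it illustrates is that the program is written once against the virtual machine’s instructions and can run wherever the interpreter runs.

```python
def run(program):
    """Execute a list of (opcode, operand) pairs on a tiny stack machine."""
    stack = []
    for op, arg in program:
        if op == "PUSH":
            stack.append(arg)
        elif op == "ADD":
            b, a = stack.pop(), stack.pop()
            stack.append(a + b)
        elif op == "MUL":
            b, a = stack.pop(), stack.pop()
            stack.append(a * b)
        else:
            raise ValueError(f"unknown opcode: {op}")
    # The result is whatever is left on top of the stack.
    return stack.pop()


# Encodes the expression (2 + 3) * 4.
program = [("PUSH", 2), ("PUSH", 3), ("ADD", None), ("PUSH", 4), ("MUL", None)]
print(run(program))  # 20
```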

System VMs

A system VM is a full-featured VM designed to provide the same functionalities as a typical physical machine. It utilizes a hypervisor to access the underlying host’s computing resources.

What Are the Different Types of Virtualizations?

There are five major categories of virtualizations:

1. Server virtualization

Server virtualization is a process that splits a physical server into multiple isolated VMs (also called virtual servers) through a hypervisor. Each virtual server behaves like a separate physical device capable of running its own OS. Server virtualization is an efficient and cost-effective way to use server resources and deploy IT services in an organization.

Without server virtualization, physical servers only utilize a small portion of their processing capacities, resulting in idle devices. A data center can also become overcrowded with numerous underutilized servers that waste space, resources, and electricity.

2. Storage virtualization

Storage virtualization, also called software-defined storage (SDS) or virtual storage area network (SAN), is the process that pools physical storage from multiple storage devices and makes it appear as a single unit. Storage hardware from different vendors, networks, or data centers often cannot interoperate on its own; storage virtualization instead creates an integrated pool that you can manage through software from a single console.

Virtualizing storage isolates the underlying storage hardware infrastructure from the management software, providing more flexibility and scalable storage pools that VMs can use. Virtual storage is an essential component for IT solutions such as hyper-converged infrastructures (HCIs) because it allows IT administrators to streamline storage activities like archiving, backup, and recovery.
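The pooling idea can be sketched as a simple address translation: the pool exposes one continuous range of blocks and maps each logical block to a block on some underlying device. The `StoragePool` class, the device names, and the sizes below are hypothetical, and real storage virtualization layers add striping, replication, and much more.

```python
import bisect


class StoragePool:
    """Hypothetical pool presenting several devices as one block range."""

    def __init__(self, devices):
        # devices: list of (name, size_in_blocks), concatenated in order.
        self.names = [name for name, _ in devices]
        self.starts = []  # first logical block served by each device
        total = 0
        for _, size in devices:
            self.starts.append(total)
            total += size
        self.total_blocks = total

    def locate(self, logical_block):
        """Map a pool-wide block number to (device_name, device_block)."""
        if not 0 <= logical_block < self.total_blocks:
            raise IndexError("block outside pool")
        # Find the last device whose starting block is <= logical_block.
        i = bisect.bisect_right(self.starts, logical_block) - 1
        return self.names[i], logical_block - self.starts[i]


pool = StoragePool([("disk-a", 100), ("disk-b", 250), ("disk-c", 50)])
print(pool.total_blocks)  # 400
print(pool.locate(99))    # ('disk-a', 99)
print(pool.locate(100))   # ('disk-b', 0)
print(pool.locate(399))   # ('disk-c', 49)
```

A VM using this pool only ever sees block numbers 0 through 399 and never needs to know which physical disk holds its data.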

3. Network virtualization

Network virtualization is a process that divides the available bandwidth into multiple independent channels, each of which you can assign to a VM as needed. Network virtualization allows VMs to attain pervasive connectivity and simplifies the entire network infrastructure management.

For example, you can programmatically create, provision, and manage the entire network infrastructure through software, including load balancing and firewalling. There are two approaches to network virtualization.

Software-defined networking (SDN)

SDN abstracts physical network resources such as switches and routers through software. With the SDN approach, network administrators use software to configure and manage network functions, creating a dynamic and scalable network.

Network functions virtualization (NFV)

Unlike the SDN approach, which focuses more on isolating network control functions from forwarding functions, NFV virtualizes the hardware appliances, providing dedicated functions such as load balancing and traffic analysis. NFV allows the network to grow without requiring the addition of more network devices.
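The separation of control functions from forwarding functions that SDN relies on can be illustrated with a short Python sketch. The `Switch` and `Controller` classes, the addresses, and the port names are all hypothetical; this does not model any real SDN protocol or product.

```python
class Switch:
    """Forwarding plane: looks up rules and makes no routing decisions."""

    def __init__(self, name):
        self.name = name
        self.flow_table = {}  # destination address -> output port

    def forward(self, destination):
        # Traffic with no matching rule is dropped in this toy model.
        return self.flow_table.get(destination, "drop")


class Controller:
    """Control plane: decides routes centrally and pushes them to switches."""

    def __init__(self, switches):
        self.switches = switches

    def install_route(self, destination, ports_by_switch):
        for switch in self.switches:
            if switch.name in ports_by_switch:
                switch.flow_table[destination] = ports_by_switch[switch.name]


s1, s2 = Switch("s1"), Switch("s2")
controller = Controller([s1, s2])
controller.install_route("10.0.0.5", {"s1": "port-2", "s2": "port-1"})
print(s1.forward("10.0.0.5"))  # port-2
print(s2.forward("10.0.0.9"))  # drop
```

Because every routing decision lives in the controller, changing the network’s behavior means updating software in one place rather than reconfiguring each device by hand.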

4. Application virtualization

Application virtualization is a process that isolates an application from the underlying OS on which it runs. It allows users to access and use applications without installing them on their own devices.

IT administrators can use application virtualization to deploy remote applications on a server and deliver them without installing anything on end-users’ devices. The virtualization software essentially streams the application’s display as pixels from the server to end-user devices by using a remote display protocol such as Microsoft’s Remote Desktop Protocol (RDP).

Users can then use the application as if it were installed locally. Application virtualization makes it simpler for IT teams to update corporate applications and roll out patches.

5. Desktop virtualization

Desktop virtualization is a technology that isolates the logical OS instance (desktop environment and its applications) from the clients that are used to access it. There are two primary ways to achieve desktop virtualization.

Local desktop virtualization

With local desktop virtualization, the OS runs on the client device as a virtualized component, and all processing and workloads occur on the local hardware. Local desktop virtualization is appropriate in environments where users don’t have continuous network connectivity.

Remote desktop virtualization

With remote desktop virtualization, users access OSes and applications that run on servers inside the data center through client devices such as laptops, smartphones, or thin clients. Remote desktop virtualization gives IT teams more centralized control over corporate applications and desktops. It can also improve the company’s return on its hardware investment, because many users share the same computing resources remotely.

Virtual desktop infrastructure (VDI) and desktop-as-a-service (DaaS) offerings are popular forms of desktop virtualization that use VMs to deliver virtual desktops to different devices.

What Is the Difference Between Virtual Machines and Containers?

As with virtualization, you can use containerization to host applications on computers. However, while virtualization allows you to run multiple OSes on a single physical host, containerization lets you deploy multiple applications that share the same OS on a single VM or server.

Containerization is a lightweight alternative to virtualization. Instead of shipping entire OSes and applications, you encapsulate your code together with the libraries, configuration files, and dependencies it needs to run. This single software package, called a container, is abstracted from its host OS and can run consistently on any platform that provides a compatible container runtime.

Containers therefore share the host’s operating system instead of requiring a separate OS installation, as each VM does. VMs are great at supporting applications that require the OS’s full functionality. You can also use VMs when you have many OSes to manage on a single server. On the other hand, containers are a great choice when your priority is to reduce the number of physical servers you’re using for multiple applications.

Virtual Machines in Cloud Computing

Virtualization and cloud computing are often considered similar technologies because both establish useful computing environments from abstract resources. Put simply: virtualization is a technology that makes cloud computing possible because it allocates abstracted resources that cloud service providers (CSPs) can deploy and manage through software.

It enables CSPs to serve organizations from their existing physical hardware, while cloud users purchase computing resources only on an as-needed basis through service models such as infrastructure-as-a-service (IaaS), bare metal cloud, platform-as-a-service (PaaS), and software-as-a-service (SaaS). Organizations can then scale these resources cost-effectively as their workloads expand.

However, despite the benefits that virtualization and cloud computing bring to organizations, there are also added security risks when employees access virtualized resources from multiple heterogeneous devices. The JumpCloud Directory Platform® is an inclusive enterprise-grade, cloud-based directory platform that organizations can leverage to support identity and access management (IAM) in cloud-based environments.

IT administrators can use JumpCloud to authenticate users to corporate resources, enabling them to know which endpoint is connected to the environment, who owns it, and what their access level is for any VM. Learn more about how to automate server management from the cloud.